Hi there!

From Meenal’s newsletter:

Bicycle face is a term that was coined in the late 19th century to describe a supposed physical condition that was believed to afflict women who rode bicycles. According to this theory, the act of riding a bicycle could cause a woman’s face to become distorted and unattractive, with bulging eyes, flushed cheeks, and a tight-lipped expression.

So the next time you hear about a new technology that’s causing anxiety or fear, remember the term bicycle face and take it with a grain of salt. After all, the future is always uncertain, but that’s part of what makes it so exciting!

Five Stories

I wonder how this AI thing is going to shape up

Harshvardhan

Revolutionary changes are cyclical. The first was agriculture. A more recent one was the growth of industry, powered by pooling. It looks like AI is the latest.

In this blog post, I wonder about the potential impact of artificial intelligence on modern society. There hasn’t been a better catalyst for a productivity jump in centuries. I note the cyclical nature of fundamental changes in history, such as agriculture and industry, and how they have had both positive and negative impacts on society.

I also discuss the Overton window, a critical tool for decision making on complex issues. It helps identify the range of ideas or models that are acceptable to the public, so that decisions can be made within that range and are more likely to be accepted. By doing so, decision-makers can increase the chances of success and minimize resistance or backlash from the public.

modeltime: Time-series Forecasting with Tidymodels

Matt Dancho

Time-series forecasting in R requires a bunch of scattered models and packages. Many of them have different design philosophies. If you want to use machine learning on them, the work is even harder.

`modeltime` unlocks time-series models and machine learning in a single framework. It supports the forecast package (ARIMA, ETS), Meta’s Prophet, Tidymodels (Random Forest, Boosted Tree, MARS, SVM), and more.

GPT-4 is here

OpenAI

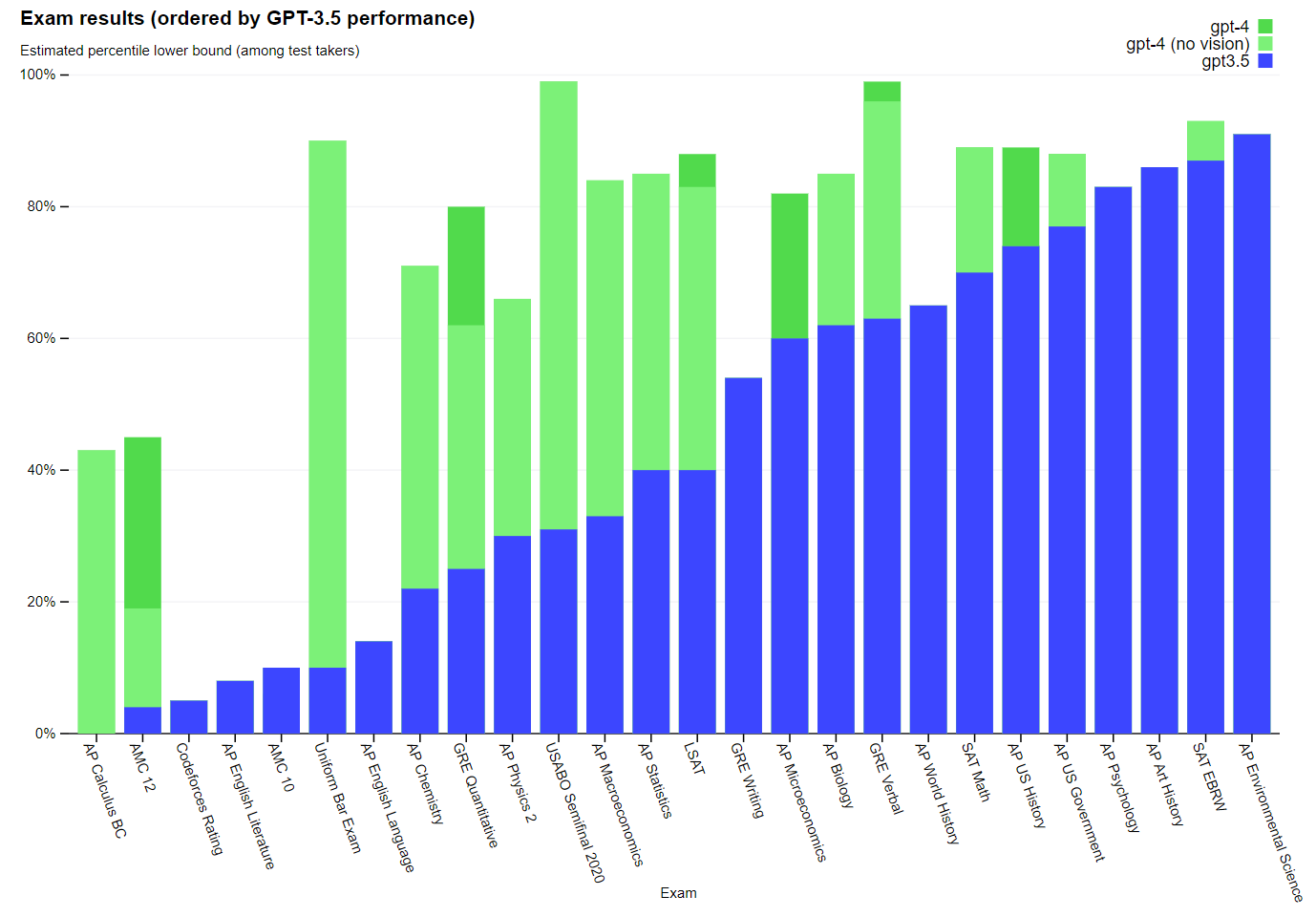

GPT-4 is the latest in OpenAI’s arsenal: a large multimodal model that accepts image and text inputs and emits text output. It can pass a simulated bar exam with a score in the top 10% of test takers (ChatGPT was in the bottom 10%). It is available via ChatGPT Plus and the API (waitlist).

Ten Techniques Learned From fast.ai

Samuel Lynn-Evans

The article highlights some current best practices in deep learning and how to implement them using the fast.ai library. It covers differential learning rates: when fine-tuning a pre-trained architecture, the earlier layers get smaller learning rates so they change little, while the last layers are tuned the most. Finding the right learning rate is essential, and this can be done with a trial run in which the learning rate is increased exponentially.
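The trial run with an exponentially increasing learning rate can be sketched in a few lines. This is a minimal illustration in plain Python, not the fast.ai implementation; the function name `lr_range_test_schedule` and the start and end values are assumptions chosen for the example.

```python
def lr_range_test_schedule(lr_start, lr_end, num_steps):
    """Learning rates that grow exponentially from lr_start to lr_end.

    Hypothetical helper for illustration; not part of the fast.ai API.
    """
    if num_steps < 2:
        raise ValueError("num_steps must be at least 2")
    # Constant multiplicative factor between consecutive steps.
    factor = (lr_end / lr_start) ** (1.0 / (num_steps - 1))
    return [lr_start * factor ** i for i in range(num_steps)]


# Assumed sweep: six steps from 1e-5 up to 1.0.
schedule = lr_range_test_schedule(lr_start=1e-5, lr_end=1.0, num_steps=6)
for step, lr in enumerate(schedule):
    print(f"step {step}: lr = {lr:.5f}")
```

In a real trial run, one mini-batch would be trained at each of these rates and the loss recorded, so that loss can be plotted against learning rate.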

The optimum learning rate is the highest value at which the loss is still descending. The article also touches on cosine annealing, which the fast.ai library implements automatically: the learning rate decreases along a cosine curve as stochastic gradient descent progresses.
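For intuition, a single cosine annealing cycle can be written as a small function. This is a sketch of the standard cosine formula, not fast.ai's own code; the name `cosine_annealed_lr` and the default `lr_min` of zero are assumptions, and restarts are left out.

```python
import math


def cosine_annealed_lr(step, total_steps, lr_max, lr_min=0.0):
    """Learning rate at `step` for one cosine annealing cycle.

    Hypothetical helper: starts at lr_max and decays to lr_min
    along half a cosine wave over total_steps.
    """
    progress = step / total_steps  # goes from 0.0 to 1.0 over the cycle
    return lr_min + 0.5 * (lr_max - lr_min) * (1.0 + math.cos(math.pi * progress))


# Assumed values: a 10-step cycle starting from a learning rate of 0.1.
for step in range(0, 11, 2):
    print(step, round(cosine_annealed_lr(step, total_steps=10, lr_max=0.1), 4))
```

The rate falls slowly at first, fastest in the middle of the cycle, and slowly again near the end.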

20 random tips and tricks for working with R, Rmarkdown, and RStudio

Ashirwad Barnwal

In this talk, Ashirwad shares many helpful tricks for working with R. Some really good ones:

Handle ambiguous function names elegantly using the conflicted package

Use the latex2exp package to convert LaTeX into plotmath expressions

Use the crop and optipng knitr chunk options for cropping and optimizing PNG plots

Use datapasta package for copying and pasting data to and from R

Four Packages

conflicted: The package conflicted provides an error-based approach to conflict management in R, forcing the user to choose which function to use when conflicts arise. Github.

latex2exp: The TeX function in latex2exp parses a LaTeX string and returns a plotmath expression usable in graphics, including for labels, legends, and text in both base graphics and ggplot2. Vignette.

datapasta: This package aims to make copying and pasting data to and from R easier by providing add-ins and functions that support a wide range of input and output situations, reducing the need for intermediate programs like Sublime or MS-Excel. Vignette.

modeltime: This is a time series forecasting framework designed to be used with the tidymodels ecosystem, which includes models such as ARIMA, Exponential Smoothing, and other time series models from the 'forecast' and 'prophet' packages.

Three Jargons

Hallucinations

In AI, hallucinations occur when a system generates output that is not grounded in reality but instead follows the patterns and associations it learned from its training data.

For example, an image generation system trained on a dataset of dogs might produce a "dog" with multiple heads or legs, simply because it has learned that certain features (e.g. fur, ears) are strongly associated with the label "dog" in its training data, without understanding what a real dog looks like.

In some cases, these hallucinations can be harmless or even amusing, but in other cases they can have serious consequences, such as when an autonomous vehicle "sees" a non-existent object and causes an accident, or when a chatbot generates offensive or harmful responses based on its training data.

Few-shot Learning

Few-shot learning is a subfield of machine learning that focuses on the ability of a model to learn new concepts and generalize to new examples with only a few labeled examples.

One approach to few-shot learning is to use meta-learning, which involves training a model on multiple learning tasks so that it can learn to learn from only a few examples. For example, a model could be trained to recognize handwritten digits, and then given a new set of digits with only a few labeled examples for each digit, the model could quickly adapt and learn to recognize the new digits.

Another approach is to use data augmentation techniques to generate new examples from existing labeled data, which can help improve the model's ability to generalize to new examples.
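To make the few-shot setting concrete, here is a tiny sketch in which a classifier works from three labeled examples per class. It uses a nearest-centroid rule, which is a deliberately simple stand-in and not meta-learning or data augmentation; the feature vectors, labels, and function names are all made up for illustration.

```python
import math


def centroid(points):
    """Average of a list of equal-length feature vectors."""
    n = len(points)
    return tuple(sum(p[i] for p in points) / n for i in range(len(points[0])))


def fit_centroids(support_set):
    """Map each label to the centroid of its few labeled examples."""
    return {label: centroid(examples) for label, examples in support_set.items()}


def predict(centroids, query):
    """Return the label whose centroid is closest to the query."""
    return min(centroids, key=lambda label: math.dist(centroids[label], query))


# Hypothetical 2-D features: only three labeled examples per class.
support_set = {
    "cat": [(1.0, 1.2), (0.8, 1.0), (1.1, 0.9)],
    "dog": [(3.0, 3.1), (2.9, 3.3), (3.2, 2.8)],
}
centroids = fit_centroids(support_set)
print(predict(centroids, (1.0, 1.1)))
print(predict(centroids, (3.1, 3.0)))
```

In practice the feature vectors would come from a model pre-trained on other tasks, which is where the ability to generalize from so few examples comes from.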

Generative Models

A generative model is a type of machine learning model designed to generate new data similar to the data it was trained on. Unlike discriminative models, which classify input data into categories or labels, generative models try to learn the underlying distribution of the data and generate new samples that follow that distribution.
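A toy version of this contrast fits in a few lines. The sketch below assumes the data follow a normal distribution, which is a strong simplification; the observations, the threshold, and the `classify` helper are invented for the example.

```python
import random
import statistics

# Made-up training data for illustration.
observations = [4.8, 5.1, 5.0, 4.9, 5.2]

# Generative view: estimate the distribution, then draw new samples from it.
mu = statistics.mean(observations)
sigma = statistics.stdev(observations)
rng = random.Random(0)  # fixed seed so the run is repeatable
samples = [rng.gauss(mu, sigma) for _ in range(1000)]


# Discriminative view: only map an input to a label, with no way to sample.
def classify(x, threshold=5.0):
    """Hypothetical rule that labels a value as 'high' or 'low'."""
    return "high" if x >= threshold else "low"


print(f"fitted mean={mu:.2f}, std={sigma:.2f}")
print(f"mean of generated samples={statistics.mean(samples):.2f}")
print(classify(5.3), classify(4.7))
```

Real generative models learn far richer distributions, but the two steps are the same: estimate the distribution, then sample from it.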

Two Tweets