Multimodal Learning | Issue #87

Humans have five senses. How many does AI have?

Hi there!

Last month, I gave an introduction to how can one fine-tune large language models, culminating in last week's how to fine tune with almost anything using embedchain.

Today, I want to talk about the next step that's still in works: multimodal learning. It is the combination of learning from various modalities of data. Generally, most input data is in a single form (unimodal): text, image, video, audio, etc. A multimodal model promises to combine knowledge from various inputs.

More data on the same inputs may have more information. Sometimes it is more straightforward to use an image to describe the information which may not be obvious from texts. It is also very common to caption an image to convey the information not presented in the image itself.

How does multimodal learning work? Let's learn about it today.

When we talk about multimodal learning, think of it like a super-chef who's equally adept at baking, grilling, and sautéing. While a regular chef might specialize in one, this super-chef combines expertise from all three to make a fantastic dish. Similarly, instead of processing just images or just texts, multimodal models can handle both and even more.

How does multimodal model work?

At the heart of multimodal learning, there are three main parts:

Unimodal Encoders: This is where each type of input data gets processed. Imagine you have a video clip with an audio commentary. One encoder would handle the video, and another would handle the audio. Each encoder is busy transforming its specific data into a language the model can understand.

An encoder is typically composed of several layers of neural networks that use non-linear transformations to extract latent/abstract features from the data.

Fusion Network: Once our encoders have done their job, we need a way to combine their outputs. This part does that. It's like blending the ingredients of a recipe to ensure every bite is flavorful.

When passing the encodings into a fusion network, one can concatenate them into a single vector (popular), or use attention or self-attention mechanisms to weight contributions of each modality based on task.

Classification or Decision Making: After fusing, we now have a combined understanding of our data. This part uses that understanding to make decisions or predictions, like identifying what's happening in a video or generating a caption for it.

They’re also neural networks but the usual ones you’ll find in textbooks. They have several layers with one or more fully connected layers, non-linear activation function, dropouts, and more attenuations to reduce overfitting.

Why Go Multimodal?

There's immense power in combining data types. For instance, while watching a movie, understanding both the visuals and the dialogues helps us grasp the story better. Similarly, a system that can look at photos and read descriptions will have a richer understanding compared to a system that only does one.

Meta AI (whose specific efforts I will cover later) says:

Humans have the ability to learn new concepts from only a few examples. We can typically read a description of an animal and then recognize it in real life. We can also look at a photo of an unfamiliar model of a car and anticipate how its engine might sound. This is partly because a single image, in fact, can “bind” together an entire sensory experience.

In the field of AI, however, as the number of modalities increases, the lack of multiple sensory data can limit standard multimodal learning, which relies on paired data. Ideally, a single joint embedding space — where many different kinds of data are distributed — could allow a model to learn visual features along with other modalities.

There are three immediate use-cases, of many many more:

Visual question answering (VQA): show a picture and ask a question about it,

Text-to-image generators: like Dall-e, Midjourney, and like,

Natural language for visual reasoning: teaching machines to imagine based on provided text.

These are not the only use-case. Rather, OpenAI and Microsoft researchers were quite shocked to learn that teaching model to see (VQA) improved their reasoning ability; or borrowing LLM architecture for speech-to-text creates a better text format than otherwise. This is a hot topic of research and there’s a lot going on.

Meta’s Efforts

Meta has been leading the open-source research on multimodal models. Check their blog on several foundational models they’ve researched to public: creating single algorithm for vision, speech, and text (Data2vec), building foundational models that work across many tasks (FLAVA), to finding the right model parameters (Omnivore), and many others. Models like Make-a-sketch, where you doodle something and give a prompt to improve, has eaten hours from my schedule.

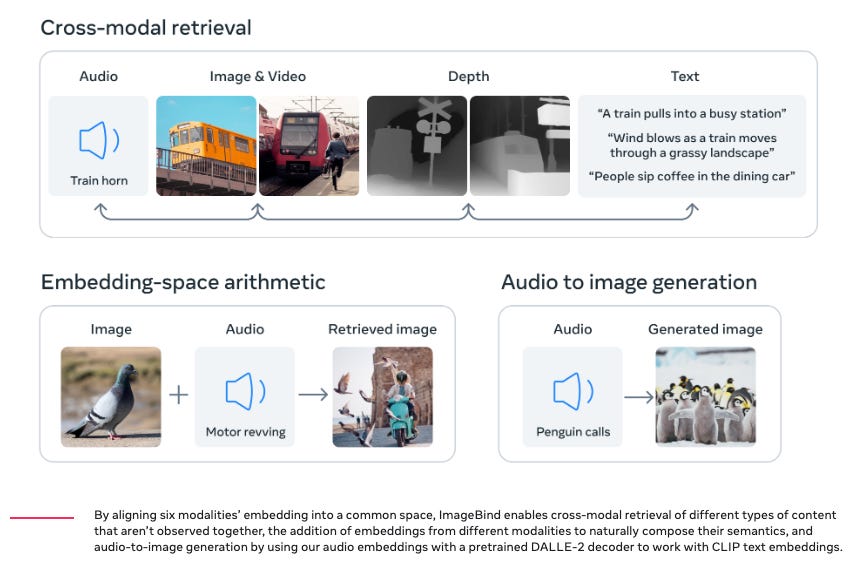

In May 2023, Meta AI released ImageBind — the biggest step.

Today, we’re introducing an approach that brings machines one step closer to humans’ ability to learn simultaneously, holistically, and directly from many different forms of information — without the need for explicit supervision (the process of organizing and labeling raw data). We have built and are open-sourcing ImageBind, the first AI model capable of binding information from six modalities.

The model learns a single embedding, or shared representation space, not just for text, image/video, and audio, but also for sensors that record depth (3D), thermal (infrared radiation), and inertial measurement units (IMU), which calculate motion and position. ImageBind equips machines with a holistic understanding that connects objects in a photo with how they will sound, their 3D shape, how warm or cold they are, and how they move.

SeamlessM4T is another cool model from them. It is an all-in-one multilingual multimodal AI translation and transcription model that supports up to 100 languages. You can transcribe audio to text, translate audio to audio, perform text to audio, and of course, text to text. One day, we will have the Babel Fish from The Hitchhiker’s Guide to the Galaxy.

If you’re looking to try: OpenAI demoed this capability of VQA many months ago, and VQA has been possible with Bing Chat for a few months now.

Conclusion

In today’s letter, I covered what are multimodal models, how do they work and what are their usecases at present. KDNuggets’ article has several other examples on how multimodal learning can be helpful. Hope you enjoyed the article. Let me know your thoughts or if there’s a specific subject you’d like to learn more about.

Links

Can you make Gandalf reveal password with your LLM prompting?

‘Life or Death:’ AI-Generated Mushroom Foraging Books Are All Over Amazon

See you next week!