Hi there!

Last week, I was at ACM SIGKDD 2023, where I presented my research on End-to-End Inventory Prediction and Contract Allocation for GD Advertising. In a constrained environment, where inventory is limited and customer demand is uncertain, it is critical to get two things right: a good demand prediction, and a good allocation of inventory to that demand. Traditionally, this has been solved as a two-stage problem. We proposed a novel Neural Lagrangian Selling (NLS) model that combines the two steps into one and outperforms existing methods. Read on: https://www.harsh17.in/kdd2023/.

I also learnt a lot about Large Language Models. They were a hot topic, and although many topics were presented at the conference, I was glued to the LLM sessions. Here are my notes on what I found interesting.

Without further ado, let’s dive into today’s topic.

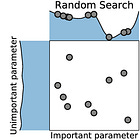

This is the third part of my series on hyperparameters. The first part explained what hyperparameters are, how they affect machine learning models, and how to choose them. I covered three basic approaches: grid search, random search and Bayesian search. In the second part, I introduced Optuna, an automatic tuning package that chooses a model’s hyperparameters for you once you give it the model, the search range, etc. It uses Bayesian optimization, where the results of previous hyperparameter trials tell us where to search next.

Today, we take it a step further. Why choose the model by hand at all? Ultimately, we have a task at hand (regression, classification, etc.), and several models can do that task (XGBoost, LightGBM, Random Forest, etc.). So there are choices to make not just for the hyperparameters but also for the model itself.
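To make the idea of "choosing the model too" concrete, here is a plain random search over both the model family and its hyperparameters, written with scikit-learn. This is not how FLAML searches. The three candidate models, their hyperparameter ranges and the `random_model_search` helper are hypothetical choices for illustration only.

```python
import random

from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import ExtraTreesClassifier, RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Joint search space: each model family comes with a sampler for its own hyperparameters.
SEARCH_SPACE = {
    "random_forest": lambda rng: RandomForestClassifier(
        n_estimators=rng.choice([50, 100, 200]),
        max_depth=rng.choice([None, 4, 8]),
        random_state=0,
    ),
    "extra_trees": lambda rng: ExtraTreesClassifier(
        n_estimators=rng.choice([50, 100, 200]),
        max_features=rng.choice(["sqrt", "log2"]),
        random_state=0,
    ),
    "logistic_regression": lambda rng: make_pipeline(
        StandardScaler(),
        LogisticRegression(C=10 ** rng.uniform(-2, 2), max_iter=1000),
    ),
}


def random_model_search(X, y, n_trials=12, seed=0):
    """Hypothetical helper: random search over (model, hyperparameters) pairs."""
    rng = random.Random(seed)
    best_name, best_model, best_score = None, None, float("-inf")
    for _ in range(n_trials):
        name = rng.choice(list(SEARCH_SPACE))  # step 1: pick a model family
        model = SEARCH_SPACE[name](rng)        # step 2: sample its hyperparameters
        score = cross_val_score(model, X, y, cv=3, scoring="accuracy").mean()
        if score > best_score:
            best_name, best_model, best_score = name, model, score
    return best_name, best_model, best_score


X, y = load_breast_cancer(return_X_y=True)
name, model, score = random_model_search(X, y)
print(f"best model: {name}, CV accuracy: {score:.3f}")
```

Every trial here costs the same, and each trial ignores what earlier ones learned. Those two weaknesses are exactly what a smarter AutoML search tries to fix.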

FLAML (Fast and Lightweight AutoML) takes this into account and performs a two-stage search: it chooses the model, tries a variety of hyperparameters for it, and returns the best model. Every step is automated. You just provide X_train and y_train (and, optionally, X_val and y_val). You can provide a lot more, but that’s the minimum.

Let’s look at FLAML more closely.

What is FLAML?

FLAML is a library that aims to free data scientists and developers from the tedious and time-consuming task of hyperparameter tuning and model selection. It enables you to build self-tuning software that adapts itself to new training data.

FLAML has several advantages over other AutoML libraries:

It is fast: FLAML can find quality models for your data within minutes or even seconds, depending on your computational budget. You can set total training time or the number of iterations.

It is economical: FLAML can save you money by using fewer resources than other AutoML libraries. It automatically adjusts the sample size, number of trials, and evaluation cost according to your budget.

It is customizable: FLAML allows you to specify your own search space, metric, constraints, guidance, or even your own training/inference/evaluation code. You can also use FLAML alongside existing machine learning frameworks and libraries.

How does FLAML work?

FLAML works by using a smart search algorithm that balances exploration and exploitation. It starts with a small sample size and a simple, cheap model, and gradually increases the complexity and diversity of the models and hyperparameters it tries. It also uses the results of earlier trials to guide where it searches next. This is the same idea that drives the Bayesian search I described earlier, although FLAML’s default search strategy is a cost-aware local search rather than classic Bayesian optimization.
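To make "exploration vs. exploitation" concrete, here is a toy randomized local search loosely in the spirit of FLOW2, the direct-search method behind FLAML’s CFO strategy. It starts from a (cheap) initial point, tries a random direction (explore), moves only if the loss improves (exploit), and shrinks the step size when many directions fail. This is a simplified sketch, not FLAML’s implementation. The function names, step sizes and stopping rule are assumptions made for illustration.

```python
import math
import random


def random_unit_vector(dim, rng):
    """Sample a direction uniformly on the unit sphere."""
    v = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def local_direct_search(loss, start, step=0.5, min_step=1e-3, max_evals=500, seed=0):
    """Toy randomized local search: try x + step*u, then x - step*u; shrink step when stuck."""
    rng = random.Random(seed)
    x, fx = list(start), loss(start)
    evals, failures = 1, 0
    while evals < max_evals and step > min_step:
        u = random_unit_vector(len(x), rng)            # explore: a random direction
        improved = False
        for sign in (1.0, -1.0):
            candidate = [xi + sign * step * ui for xi, ui in zip(x, u)]
            fc = loss(candidate)
            evals += 1
            if fc < fx:                                 # exploit: keep only improvements
                x, fx, improved = candidate, fc, True
                break
        if improved:
            failures = 0
        else:
            failures += 1
            if failures >= 2 * len(x):                  # many failed directions: refine locally
                step, failures = step / 2, 0
    return x, fx


# Toy loss with its minimum at (3, -1), standing in for a validation error.
best_x, best_loss = local_direct_search(lambda p: (p[0] - 3) ** 2 + (p[1] + 1) ** 2,
                                        start=[0.0, 0.0])
print(best_x, best_loss)
```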

FLAML supports different types of models and tasks:

For common machine learning tasks like classification and regression, FLAML quickly finds quality models for your data with low computational resources. It automatically tunes the hyperparameters and selects the best model from default learners such as LightGBM, XGBoost and random forest.

For time-series forecasting, it adds learners such as SARIMAX and Prophet to the regression learners.

For user-defined functions or custom learners, FLAML allows you to specify your own training/inference/evaluation code and optimize it with respect to any metric.

Under the hood, FLAML uses several optimization strategies like multi-fidelity optimization to search the hyperparameter space efficiently. The key ideas are:

Sample hyperparameter configurations selectively, based on the results of past trials

Evaluate model performance on a subset of data to quickly estimate tuning progress

Calculate an “Estimated Cost for Improvement” (ECI) for each learner, to decide which one is worth spending more search time on

Focus sampling on more promising configurations, balancing exploration and exploitation

Transfer knowledge from past tuning runs to speed up search
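The ECI idea can be sketched in a few lines. This is a toy version, not FLAML’s exact formula, which has additional terms. It estimates a learner’s cost for improvement from the compute spent since its last improvement and the compute between its last two improvements. It then samples learners with probability proportional to 1/ECI, so learners that improve cheaply get more of the budget. `LearnerStats`, `choose_learner` and the numbers in the usage example are hypothetical.

```python
import random
from dataclasses import dataclass, field


@dataclass
class LearnerStats:
    total_cost: float = 0.0                                 # compute spent on this learner so far
    improvement_costs: list = field(default_factory=list)   # total_cost at each new best loss

    def eci(self) -> float:
        """Toy estimated cost for improvement (a simplification, not FLAML's formula)."""
        if len(self.improvement_costs) < 2:
            return max(self.total_cost, 1e-9)  # little history: treat as cheap to try
        k1, k2 = self.improvement_costs[-1], self.improvement_costs[-2]
        # Cost since the last improvement vs. cost between the last two improvements.
        return max(self.total_cost - k1, k1 - k2, 1e-9)


def choose_learner(stats, rng):
    """Sample a learner with probability inversely proportional to its ECI."""
    names = list(stats)
    weights = [1.0 / stats[n].eci() for n in names]
    return rng.choices(names, weights=weights, k=1)[0]


stats = {
    "lgbm": LearnerStats(total_cost=10.0, improvement_costs=[2.0, 9.0]),  # improved recently
    "rf": LearnerStats(total_cost=30.0, improvement_costs=[3.0, 5.0]),    # stuck for a while
}
rng = random.Random(0)
counts = {"lgbm": 0, "rf": 0}
for _ in range(1000):
    counts[choose_learner(stats, rng)] += 1
print(counts)  # "lgbm" should be picked far more often than "rf"
```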

These mechanisms enable FLAML to tune complex models with minimal computational resources. The multi-fidelity aspect evaluates models quickly, then concentrates compute on refining the most promising ones.
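FLAML’s own schedule for growing the sample size is more involved. As a hedged illustration of the general multi-fidelity idea, here is a successive-halving-style sketch. Successive halving is a common multi-fidelity scheme swapped in for illustration, not FLAML’s algorithm. Every configuration is scored cheaply on a small subsample, only the best half survives, and the survivors get twice as much data in the next round. The `successive_halving` helper and the random-forest configurations are hypothetical.

```python
import numpy as np
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split


def successive_halving(configs, X_train, y_train, X_val, y_val, min_samples=50, seed=0):
    """Score configs on a small subsample, keep the best half, double the sample, repeat."""
    rng = np.random.default_rng(seed)
    n = min_samples
    survivors = list(configs)
    while len(survivors) > 1 and n <= len(X_train):
        idx = rng.choice(len(X_train), size=n, replace=False)  # low fidelity: a random subset
        scores = []
        for cfg in survivors:
            model = RandomForestClassifier(random_state=0, **cfg).fit(X_train[idx], y_train[idx])
            scores.append(model.score(X_val, y_val))
        order = np.argsort(scores)[::-1]                       # best validation accuracy first
        survivors = [survivors[i] for i in order[: max(1, len(survivors) // 2)]]
        n *= 2                                                 # more data for fewer configs
    return survivors[0]


X, y = load_breast_cancer(return_X_y=True)
X_train, X_val, y_train, y_val = train_test_split(X, y, test_size=0.25, random_state=0)
configs = [{"n_estimators": n, "max_depth": d} for n in (10, 50) for d in (2, 4, 8, None)]
best = successive_halving(configs, X_train, y_train, X_val, y_val)
print("selected config:", best)
```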

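In fact, the FLAML authors report that it outperforms several top-ranked AutoML libraries on a large open-source AutoML benchmark, often with equal or smaller time budgets.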

FLAML is a fast and lightweight AutoML library that can handle various types of models and tasks with low computational cost and high customizability. It is easy to install and use, and it can save you time and money by automating the hyperparameter tuning and model selection process.

If you are interested in learning more about FLAML, you can check out FLAML’s GitHub page or their many examples for specific tasks including classification, regression, NLP, ranking, and time-series forecasting.

You can find the complete details of the algorithm in their paper: FLAML Research Paper. I hope you enjoyed learning about FLAML! Let me know if you have any feedback in the comments, and show your love by hitting the ♥︎ button on Substack!

See you next week!

— Harsh